Chapter 12 K-Neariest Neighber

Method we introduced before usually have very clear definition of what the parameters are. In a linear model, we have a set of parameters \(\boldsymbol{\beta}\) and our estimated function value, for any target point \(x_0\) is \(x_0^\text{T}\boldsymbol{\beta}\). As the model gets more complicated, we may need models that can be even more flexible. An example we saw previously is the smoothing spline, in which the features (knots) are adaptively defined using the observed samples, and there are \(n\) parameters. Many of the nonparametric models shares this property. Probably the simplest one of them is the \(K\) nearest neighbor (KNN) method, which is based on the idea of local averaging, i.e., we estimate \(f(x_0)\) using observations close to \(x_0\). KNN can be used for both regression and classification problems. This is traditionally called nonparametric models in statistics.

12.1 Definition

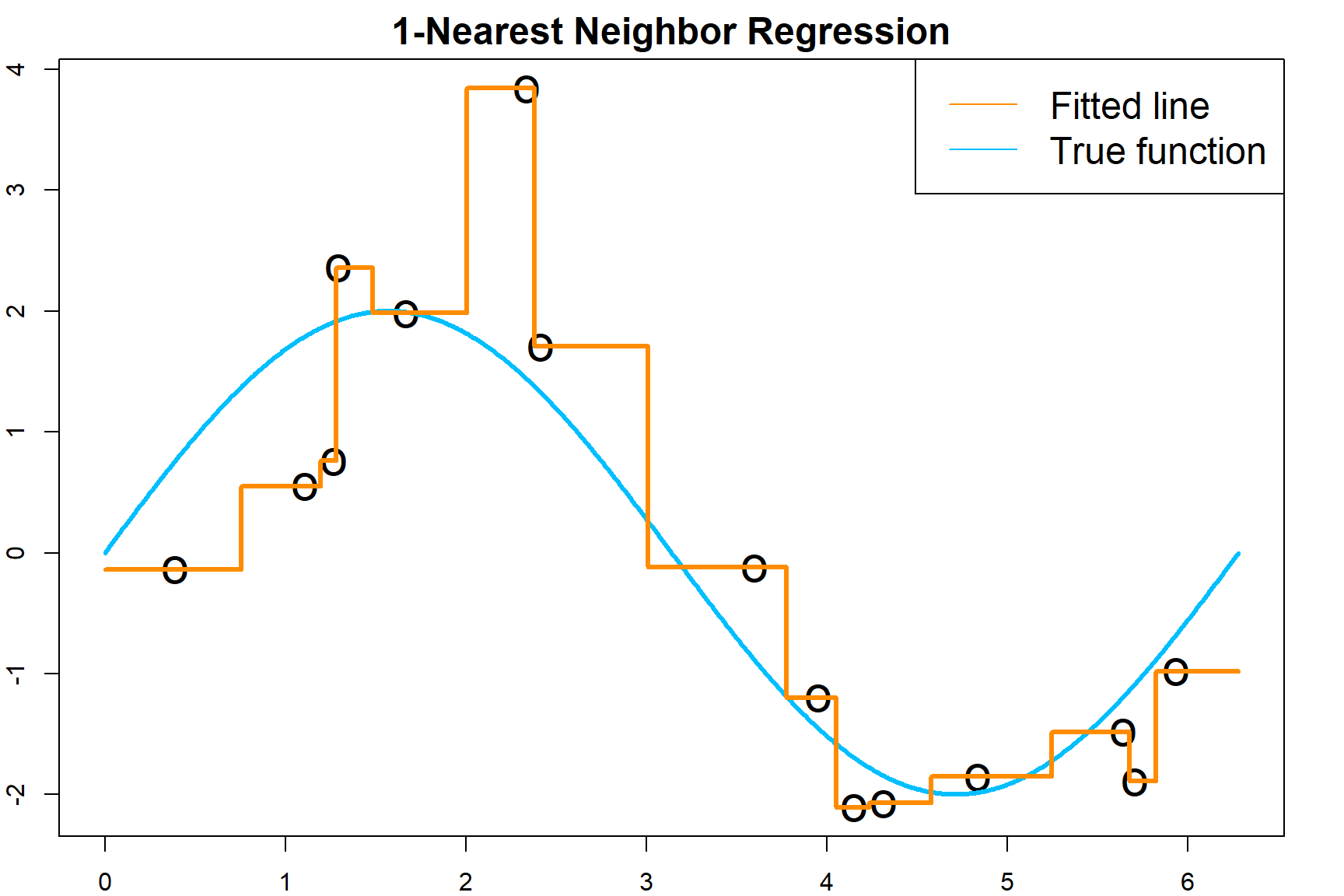

Suppose we collect a set of observations \(\{x_i, y_i\}_{i=1}^n\), the prediction at a new target point \(x_0\) is

\[\widehat y = \frac{1}{k} \sum_{x_i \in N_k(x_0)} y_i,\] where \(N_k(x_0)\) defines the \(k\) samples from the training data that are closest to \(x_0\). As default, closeness is defined using a distance measure, such as the Euclidean distance. Here is a demonstration of the fitted regression function.

# generate training data with 2*sin(x) and random Gaussian errors

set.seed(1)

x <- runif(15, 0, 2*pi)

y <- 2*sin(x) + rnorm(length(x))

# generate testing data points where we evaluate the prediction function

test.x = seq(0, 1, 0.001)*2*pi

# "1-nearest neighbor" regression using kknn package

library(kknn)

knn.fit = kknn(y ~ x, train = data.frame(x = x, y = y),

test = data.frame(x = test.x),

k = 1, kernel = "rectangular")

test.pred = knn.fit$fitted.values

# plot the data

par(mar=rep(2,4))

plot(x, y, xlim = c(0, 2*pi), pch = "o", cex = 2,

xlab = "", ylab = "", cex.lab = 1.5)

title(main="1-Nearest Neighbor Regression", cex.main = 1.5)

# plot the true regression line

lines(test.x, 2*sin(test.x), col = "deepskyblue", lwd = 3)

# plot the fitted line

lines(test.x, test.pred, type = "s", col = "darkorange", lwd = 3)

legend("topright", c("Fitted line", "True function"),

col = c("darkorange", "deepskyblue"), lty = 1, cex = 1.5)

12.2 Tuning \(k\)

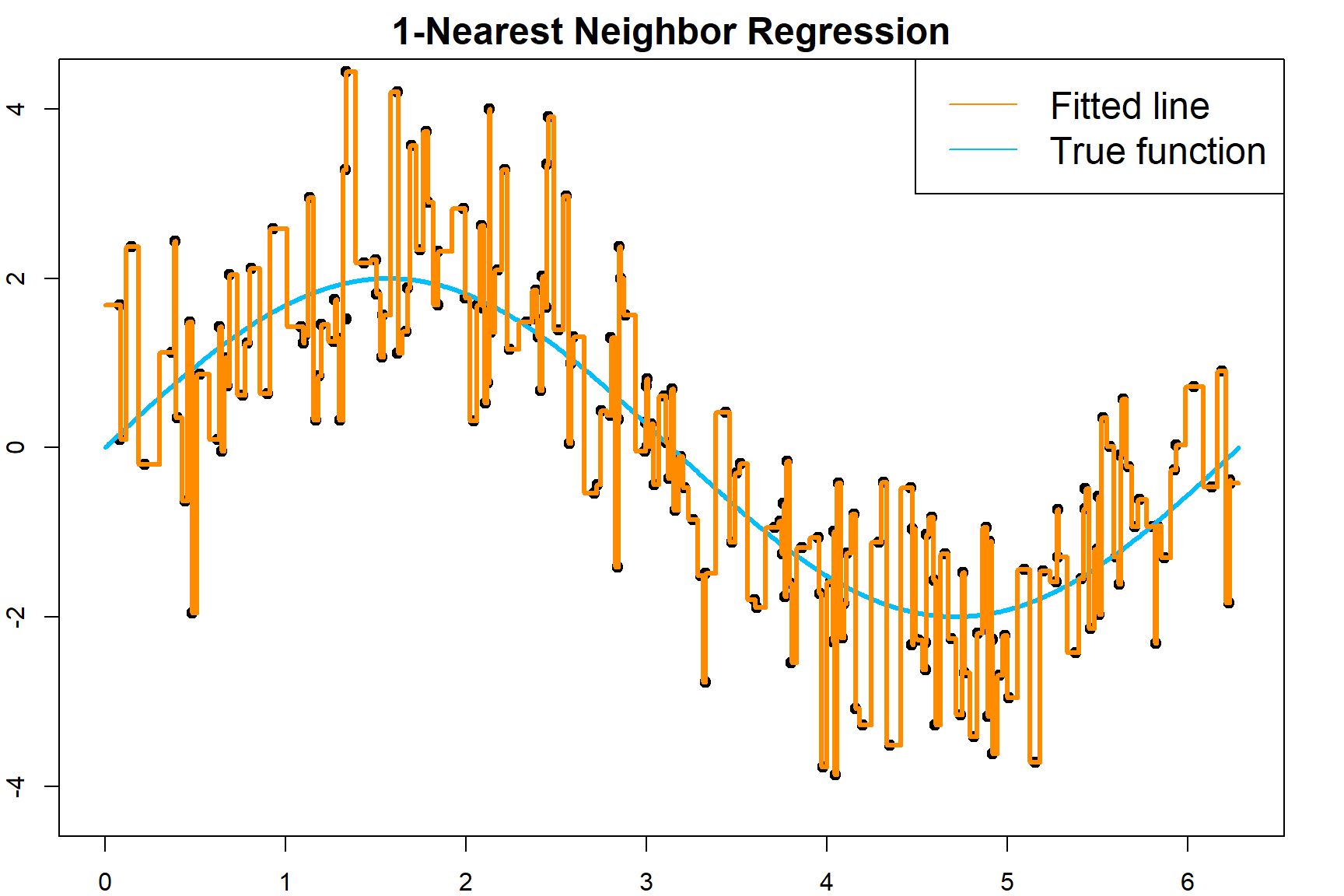

Tuning \(k\) is crucial for \(k\)NN. Let’s observe its effect. This time, I generate 200 observations. For 1NN, the fitted regression line is very jumpy.

# generate more data

set.seed(1)

n = 200

x <- runif(n, 0, 2*pi)

y <- 2*sin(x) + rnorm(length(x))

test.y = 2*sin(test.x) + rnorm(length(test.x))

# 1-nearest neighbor

knn.fit = kknn(y ~ x, train = data.frame(x = x, y = y),

test = data.frame(x = test.x),

k = 1, kernel = "rectangular")

test.pred = knn.fit$fitted.values

We can evaluate the prediction error of this model:

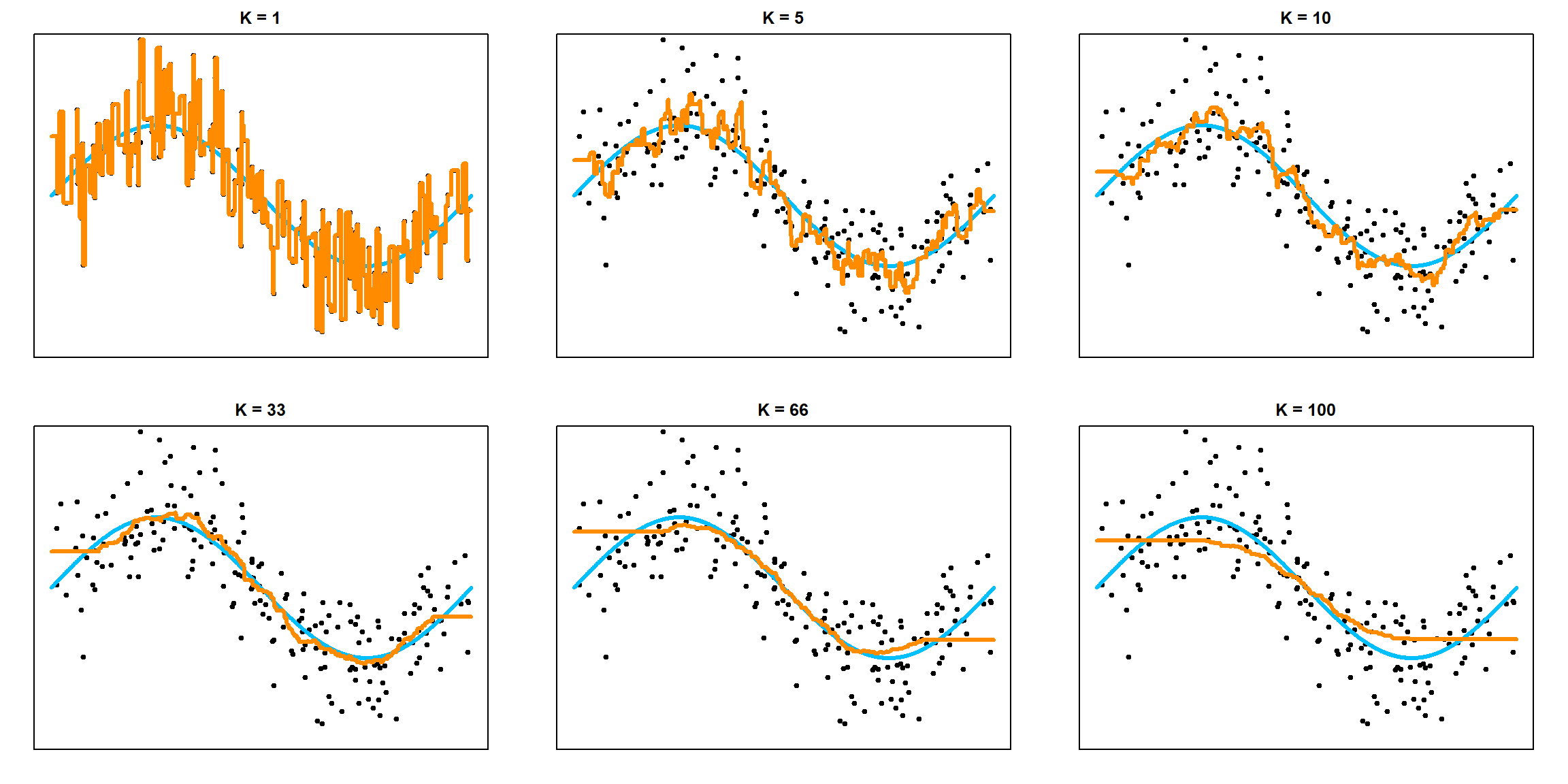

If we consider different values of \(k\), we can observe the trade-off between bias and variance.

## Prediction Error for K = 1 : 2.09748780881932

## Prediction Error for K = 5 : 1.39071867992277

## Prediction Error for K = 10 : 1.24696415340282

## Prediction Error for K = 33 : 1.21589627474692

## Prediction Error for K = 66 : 1.37604707375972

## Prediction Error for K = 100 : 1.42868908518756

As \(k\) increases, we have a more stable model, i.e., smaller variance. However, the bias is also increased. As \(k\) decreases, the bias decreases, but the model is less stable.

12.3 The Bias-variance Trade-off

Formally, the prediction error (at a given target point \(x_0\)) can be broke down into three parts: the irreducible error, the bias squared, and the variance.

\[\begin{aligned} \text{E}\Big[ \big( Y - \widehat f(x_0) \big)^2 \Big] &= \text{E}\Big[ \big( Y - f(x_0) + f(x_0) - \text{E}[\widehat f(x_0)] + \text{E}[\widehat f(x_0)] - \widehat f(x_0) \big)^2 \Big] \\ &= \text{E}\Big[ \big( Y - f(x_0) \big)^2 \Big] + \text{E}\Big[ \big(f(x_0) - \text{E}[\widehat f(x_0)] \big)^2 \Big] + \text{E}\Big[ \big(E[\widehat f(x_0)] - \widehat f(x_0) \big)^2 \Big] + \text{Cross Terms}\\ &= \underbrace{\text{E}\Big[ ( Y - f(x_0))^2 \big]}_{\text{Irreducible Error}} + \underbrace{\Big(f(x_0) - \text{E}[\widehat f(x_0)]\Big)^2}_{\text{Bias}^2} + \underbrace{\text{E}\Big[ \big(\widehat f(x_0) - \text{E}[\widehat f(x_0)] \big)^2 \Big]}_{\text{Variance}} \end{aligned}\]As we can see from the previous example, when \(k=1\), the prediction error is about 2. This is because for all the testing points, the theoretical irreducible error is 1 (variance of the error term), the bias is almost 0 since the function is smooth, and the variance is the variance of 1 nearest neighbor, which is again 1. On the other extreme side, when \(k = n\), the variance should be in the level of \(1/n\), the bias is the difference between the sin function and the overall average. Overall, we can expect the trend:

- As \(k\) increases, bias increases and variance decreases

- As \(k\) decreases, bias decreases and variance increases

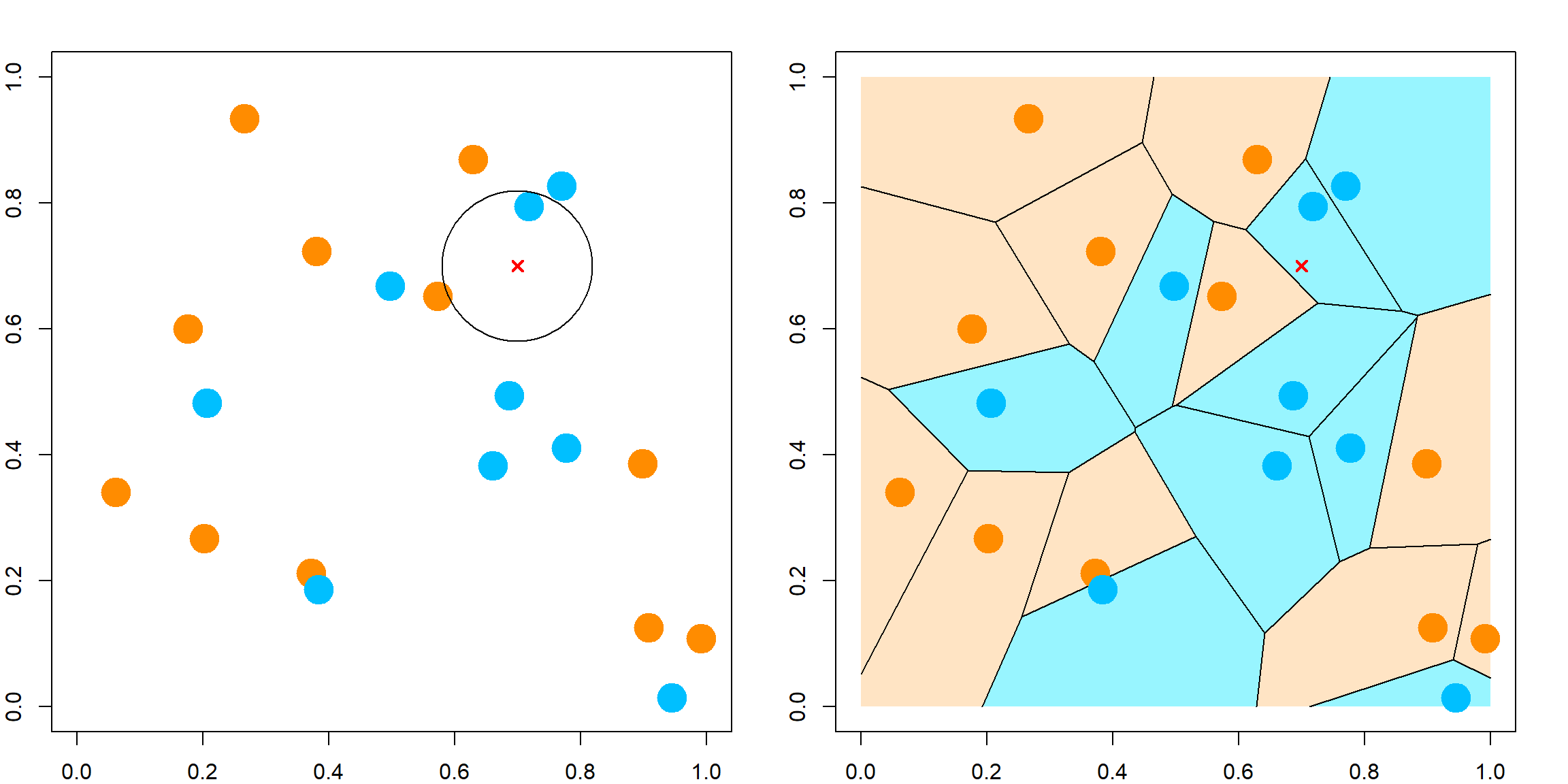

12.4 KNN for Classification

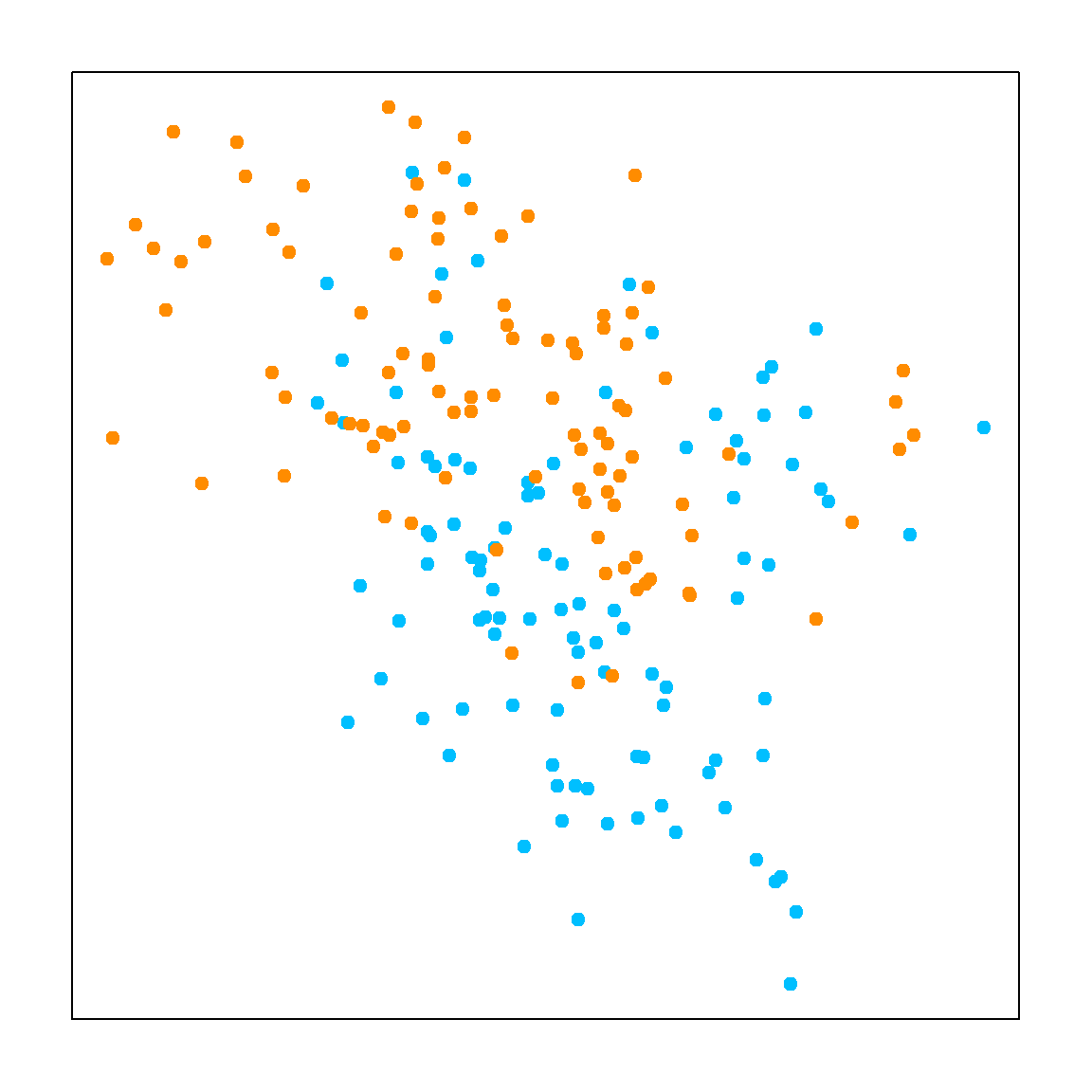

For classification, KNN is different from the regression model in term of finding neighbers. The only difference is to majority voting instead of averaging. Majority voting means that we look for the most popular class label among its neighbors. For 1NN, it is simply the class of the closest neighbor. The visualization of 1NN is a Voronoi tessellation. The plot on the left is some randomly observed data in \([0, 1]^2\), and the plot on the right is the corresponding 1NN classification model.

12.5 Example 1: An artificial data

We use artificial data from the ElemStatLearn package.

library(ElemStatLearn)

x <- mixture.example$x

y <- mixture.example$y

xnew <- mixture.example$xnew

par(mar=rep(2,4))

plot(x, col=ifelse(y==1, "darkorange", "deepskyblue"),

axes = FALSE, pch = 19)

box()

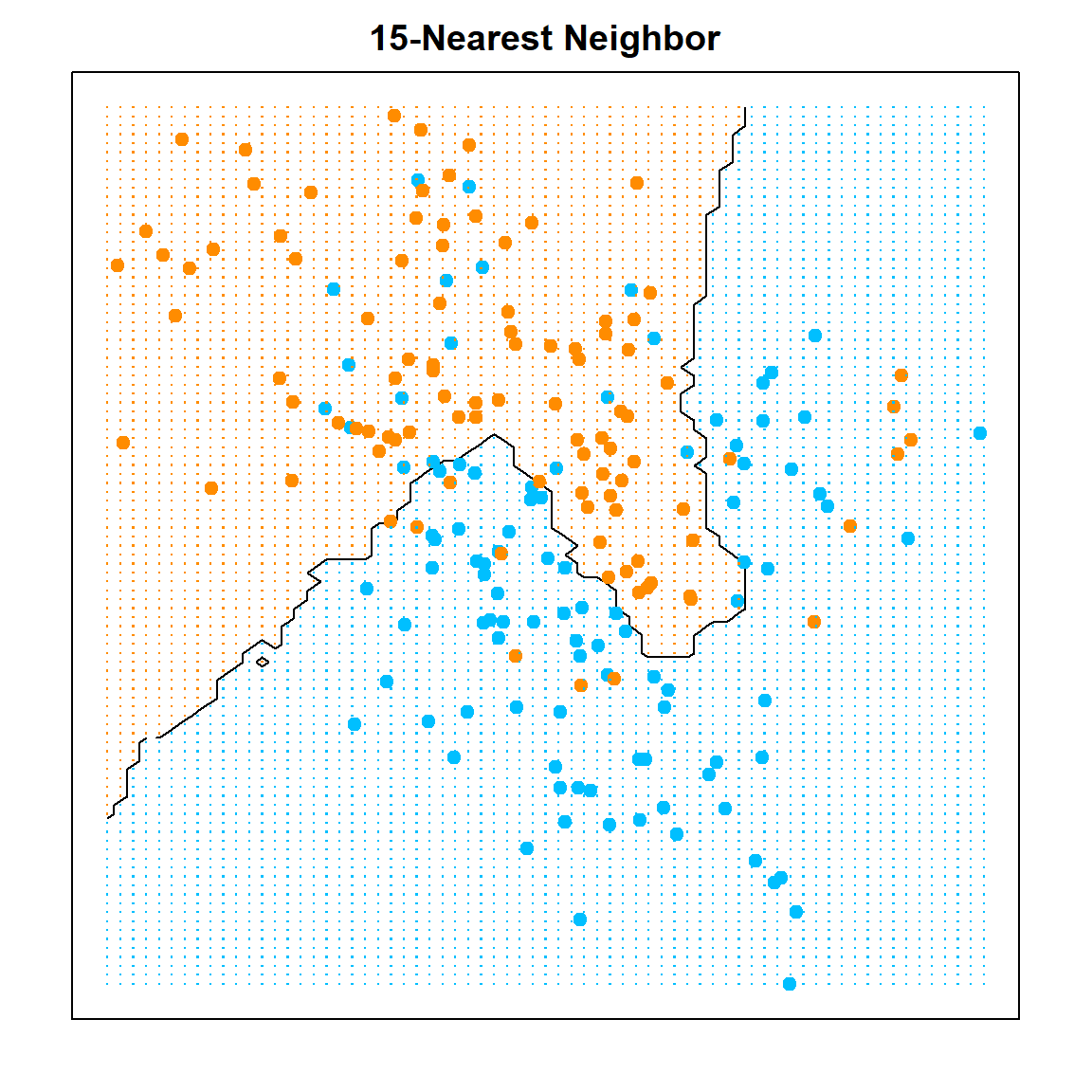

The decision boundary is highly nonlinear. We can utilize the contour() function to demonstrate the result.

# knn classification

k = 15

knn.fit <- knn(x, xnew, y, k=k)

px1 <- mixture.example$px1

px2 <- mixture.example$px2

pred <- matrix(knn.fit == "1", length(px1), length(px2))

contour(px1, px2, pred, levels=0.5, labels="",axes=FALSE)

box()

title(paste(k, "-Nearest Neighbor", sep= ""))

points(x, col=ifelse(y==1, "darkorange", "deepskyblue"), pch = 19)

mesh <- expand.grid(px1, px2)

points(mesh, pch=".", cex=1.2, col=ifelse(pred, "darkorange", "deepskyblue"))

We can evaluate the in-sample prediction result of this model using a confusion matrix:

# the confusion matrix

knn.fit <- knn(x, x, y, k = 15)

xtab = table(knn.fit, y)

library(caret)

confusionMatrix(xtab)

## Confusion Matrix and Statistics

##

## y

## knn.fit 0 1

## 0 82 13

## 1 18 87

##

## Accuracy : 0.845

## 95% CI : (0.7873, 0.8922)

## No Information Rate : 0.5

## P-Value [Acc > NIR] : <2e-16

##

## Kappa : 0.69

##

## Mcnemar's Test P-Value : 0.4725

##

## Sensitivity : 0.8200

## Specificity : 0.8700

## Pos Pred Value : 0.8632

## Neg Pred Value : 0.8286

## Prevalence : 0.5000

## Detection Rate : 0.4100

## Detection Prevalence : 0.4750

## Balanced Accuracy : 0.8450

##

## 'Positive' Class : 0

## 12.6 Degrees of Freedom

From our ridge lecture, we have a definition of the degrees of freedom. For a linear model, the degrees of freedom is \(p\). For KNN, we can simply verify this by

\[\begin{align} \text{DF}(\widehat{f}) =& \frac{1}{\sigma^2} \sum_{i = 1}^n \text{Cov}(\widehat{y}_i, y_i)\\ =& \frac{1}{\sigma^2} \sum_{i = 1}^n \frac{\sigma^2}{k}\\ =& \frac{n}{k} \end{align}\]

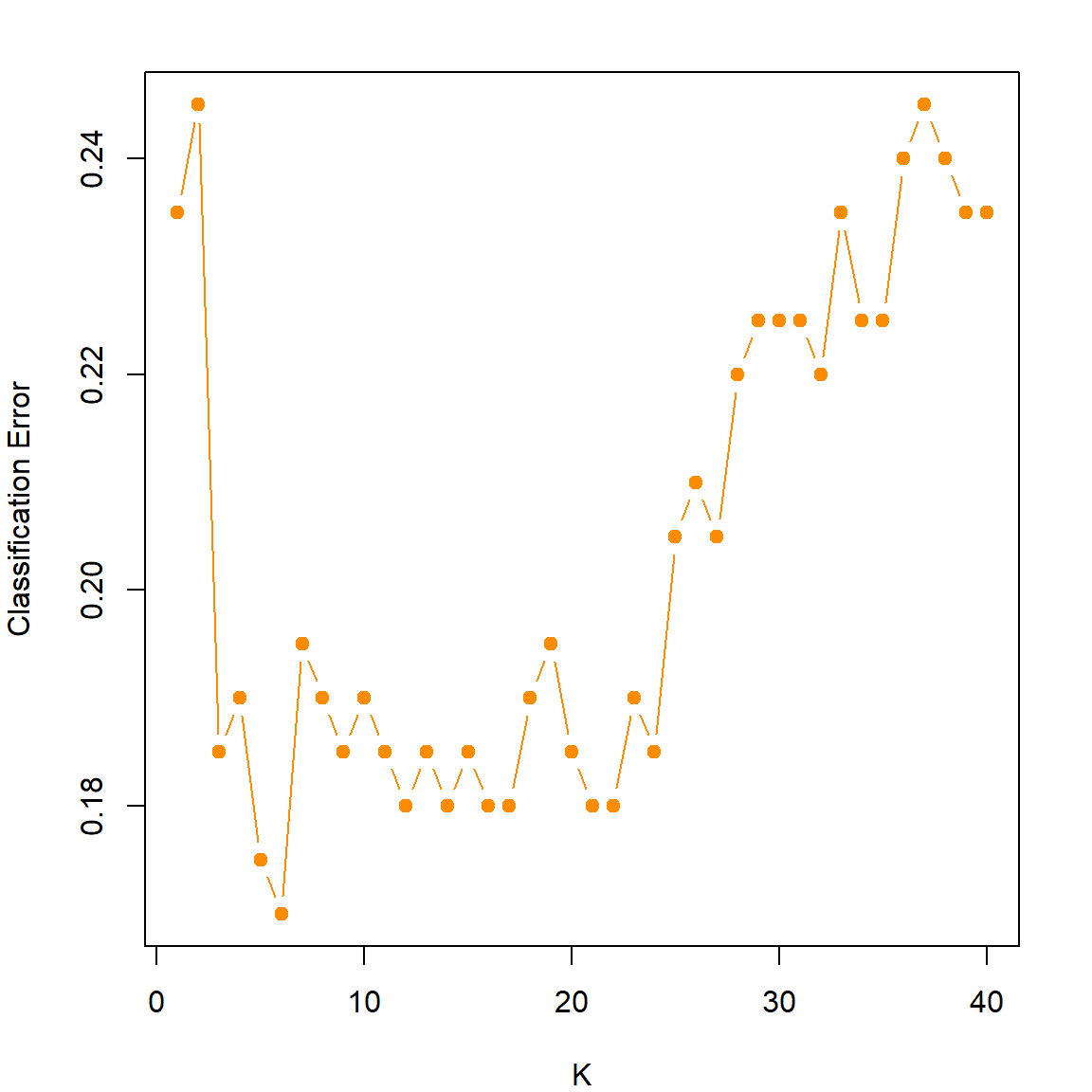

12.7 Tuning with the caret Package

The caret package has some built-in feature that can tune some popular machine learning models using cross-validation. The cross-validation settings need to be specified using the \(trainControl()\) function.

There are other cross-validation methods, such as repeatedcv the repeats the CV several times, and leave-one-out CV LOOCV. For more details, you can read the documentation. We can then setup the training by specifying a grid of \(k\) values, and also the CV setup. Make sure that you specify method = "knn" and also construct the outcome as a factor in a data frame.

set.seed(1)

knn.cvfit <- train(y ~ ., method = "knn",

data = data.frame("x" = x, "y" = as.factor(y)),

tuneGrid = data.frame(k = seq(1, 40, 1)),

trControl = control)

plot(knn.cvfit$results$k, 1-knn.cvfit$results$Accuracy,

xlab = "K", ylab = "Classification Error", type = "b",

pch = 19, col = "darkorange")

Print out the fitted object, we can see that the best \(k\) is 6. And there is a clear “U” shaped pattern that shows the potential bias-variance trade-off.

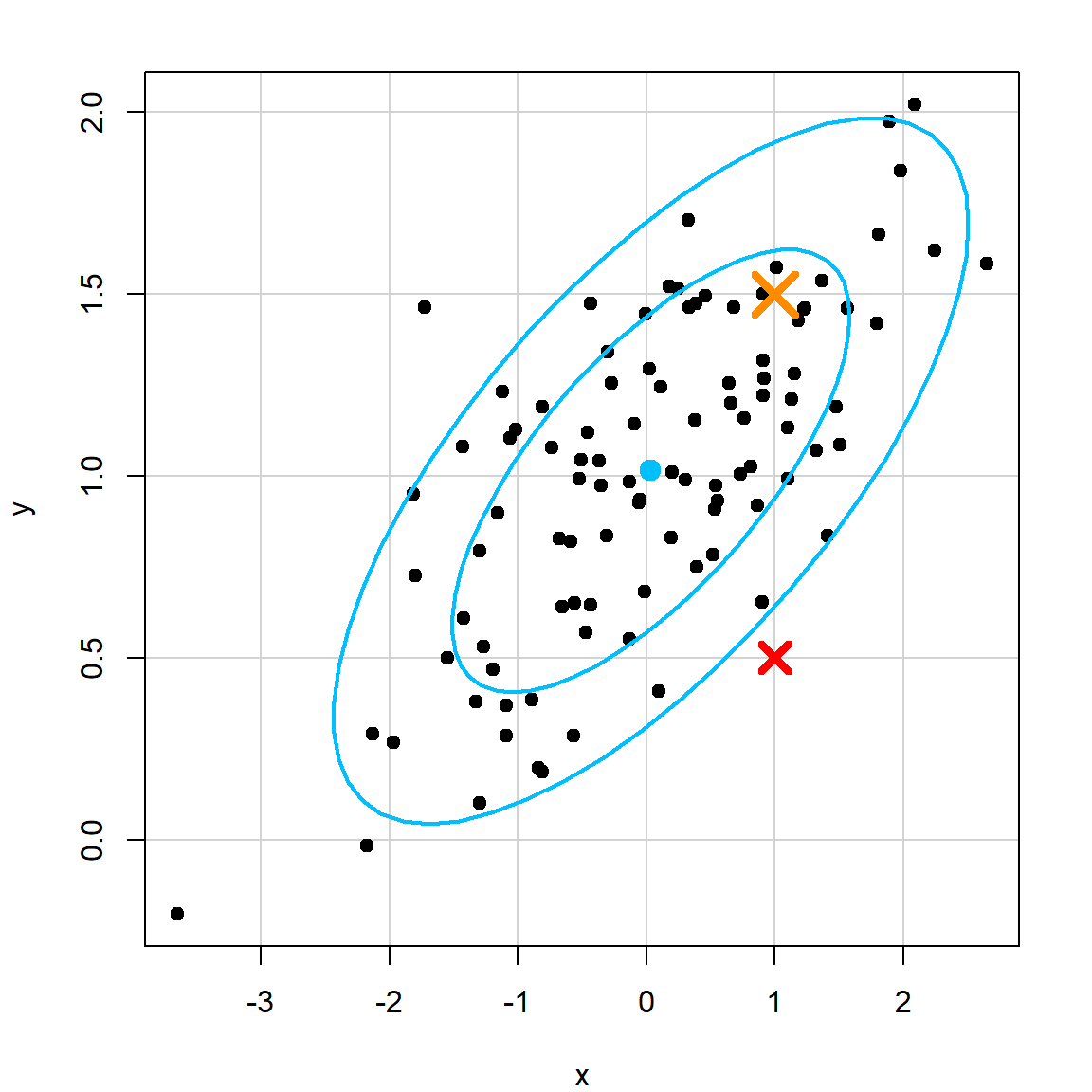

12.8 Distance Measures

Closeness between two points needs to be defined based on some distance measures. By default, we use the squared Euclidean distance (\(\ell_2\) norm) for continuous variables:

\[d^2(\mathbf{u}, \mathbf{v}) = \lVert \mathbf{u}- \mathbf{v}\rVert_2^2 = \sum_{j=1}^p (u_j, v_j)^2.\] However, this measure is not scale invariant. A variable with large scale can dominate this measure. Hence, we often consider a normalized version:

\[d^2(\mathbf{u}, \mathbf{v}) = \sum_{j=1}^p \frac{(u_j, v_j)^2}{\sigma_j^2},\] where \(\sigma_j^2\) can be estimated using the sample variance of variable \(j\). Another choice that further taking the covariance structure into consideration is the Mahalanobis distance:

\[d^2(\mathbf{u}, \mathbf{v}) = (\mathbf{u}- \mathbf{v})^\text{T}\Sigma^{-1} (\mathbf{u}- \mathbf{v}),\] where \(\Sigma\) is the covariance matrix, and can be estimated using the sample covariance. In the following plot, the red cross and orange cross have the same Euclidean distance to the center. However, the red cross is more of a “outlier” based on the joint distribution. The Mahalanobis distance would reflect this.

x=rnorm(100)

y=1 + 0.3*x + 0.3*rnorm(100)

library(car)

dataEllipse(x, y, levels=c(0.6, 0.9), col = c("black", "deepskyblue"), pch = 19)

points(1, 0.5, col = "red", pch = 4, cex = 2, lwd = 4)

points(1, 1.5, col = "darkorange", pch = 4, cex = 3, lwd = 4)

For categorical variables, the Hamming distance is commonly used:

\[d(\mathbf{u}, \mathbf{v}) = \sum_{j=1}^p I(u_j \neq v_j).\] It simply counts how many entries are not the same.

12.9 1NN Error Bound

We can show that 1NN can achieve reasonable performance for fixed \(p\), as \(n \rightarrow \infty\) by showing that the 1NN error is no more than twice of the Bayes error, which is the smaller one of \(P(Y = 1 | X = x_0)\) and \(1 - P(Y = 1 | X = x_0)\).

Let’s denote \(x_{1nn}\) the closest neighbor of \(x_0\), we have \(d(x_0, x_{1nn}) \rightarrow 0\) by the law of large numbers. Assuming smoothness, we have \(P(Y = 1 | x_{1nn}) \rightarrow P(Y = 1 | x_0)\). Hence, the 1NN error is the chance we make a wrong prediction, which can happen in two situations. WLOG, let’s assume that \(P(Y = 1 | X = x_0)\) is larger than \(1-P(Y = 1 | X = x_0)\), then

\[\begin{align} & \,P(Y=1|x_0)[ 1 - P(Y=1|x_{1nn})] + P(Y=1|x_0)[ 1 - P(Y=1|x_{1nn})]\\ \leq & \,[ 1 - P(Y=1|x_{1nn})] + [ 1 - P(Y=1|x_{1nn})]\\ \rightarrow & \, 2[ 1 - P(Y=1|x_0)]\\ = & \,2 \times \text{Bayes Error}\\ \end{align}\]

This is a crude bound, but shows that 1NN can still be a reasonable estimator when the noise is small (Bayes error small).

12.10 Example 2: Handwritten Digit Data

Let’s consider another example using the handwritten digit data. Each observation in this data is a \(16 \times 16\) pixel image. Hence, the total number of variables is \(256\). At each pixel, we have the gray scale as the numerical value.

# Handwritten Digit Recognition Data

library(ElemStatLearn)

# the first column is the true digit

dim(zip.train)

## [1] 7291 257

dim(zip.test)

## [1] 2007 257

# look at one sample

image(zip2image(zip.train, 1), col=gray(256:0/256), zlim=c(0,1),

xlab="", ylab="", axes = FALSE)

## [1] "digit 6 taken"

We use 3NN to predict all samples in the testing data. The model is fairly accurate.

# fit 3nn model and calculate the error

knn.fit <- knn(zip.train[, 2:257], zip.test[, 2:257], zip.train[, 1], k=3)

# overall prediction error

mean(knn.fit != zip.test[,1])

## [1] 0.05430992

# the confusion matrix

table(knn.fit, zip.test[,1])

##

## knn.fit 0 1 2 3 4 5 6 7 8 9

## 0 355 0 6 1 0 3 3 0 4 2

## 1 0 258 0 0 2 0 0 1 0 0

## 2 1 0 182 2 0 2 1 1 2 0

## 3 0 0 1 153 0 4 0 1 5 0

## 4 0 3 1 0 183 0 2 4 0 3

## 5 0 0 0 7 2 146 0 0 1 0

## 6 0 2 2 0 2 0 164 0 0 0

## 7 2 1 2 1 2 0 0 138 1 4

## 8 0 0 4 0 1 1 0 1 151 0

## 9 1 0 0 2 8 4 0 1 2 16812.11 Curse of Dimensionality

Many of the practical problems we encounter today are high-dimensional. The resolution of the handwritten digit example is \(16 \times 16 = 256\). Genetic studies often involves more than 25K gene expressions, etc. For a given sample size \(n\), as the number of variables \(p\) increases, the data becomes very sparse. Nearest neighbor methods usually do not perform very well on high-dimensional data. This is because for any given target point, there will not be enough training data that lies close enough. To see this, let’s consider a \(p\)-dimensional hyper-cube. Suppose we have \(n=1000\) observations uniformly spread out in this cube, and we are interested in predicting a target point with \(k=10\) neighbors. If these neighbors are really close to the target point, then this would be a good estimation with small bias. Suppose \(p=2\), then we know that if we take a cube (square) with height and width both \(l = 0.1\), then there will be \(1000 \times 0.1^2 = 10\) observations within the square. In general, we have the relationship

\[l^p = \frac{k}{n}\] Try different \(p\), we have

- If \(p = 2\), \(l = 0.1\)

- If \(p = 10\), \(l = 0.63\)

- If \(p = 100\), \(l = 0.955\)

This implies that if we have 100 dimensions, then the nearest 10 observations would be 0.955 away from the target point at each dimension, this is almost at the other corner of the cube. Hence there will be a very large bias. Decreasing \(k\) does not help much in this situation since even the closest point can be really far away, and the model would have large variance.

However, why our model performs well in the handwritten digit example? There is possibly (approximately) a lower dimensional representation of the data so that when you evaluate the distance on the high-dimensional space, it is just as effective as working on the low dimensional space. Dimension reduction is an important topic in statistical learning and machine learning. Many methods, such as sliced inverse regression (Li 1991) and UMAP (McInnes, Healy, and Melville 2018) have been developed based on this concept.