STAT 546 Homework 1¶

Assigned: Apr 16, 2024; Due: 11:59 PM CT, Apr 25, 2024

For general instruction of homework assignments, please refer to our previous homework.

Question 1: Designing a Super Mario Game¶

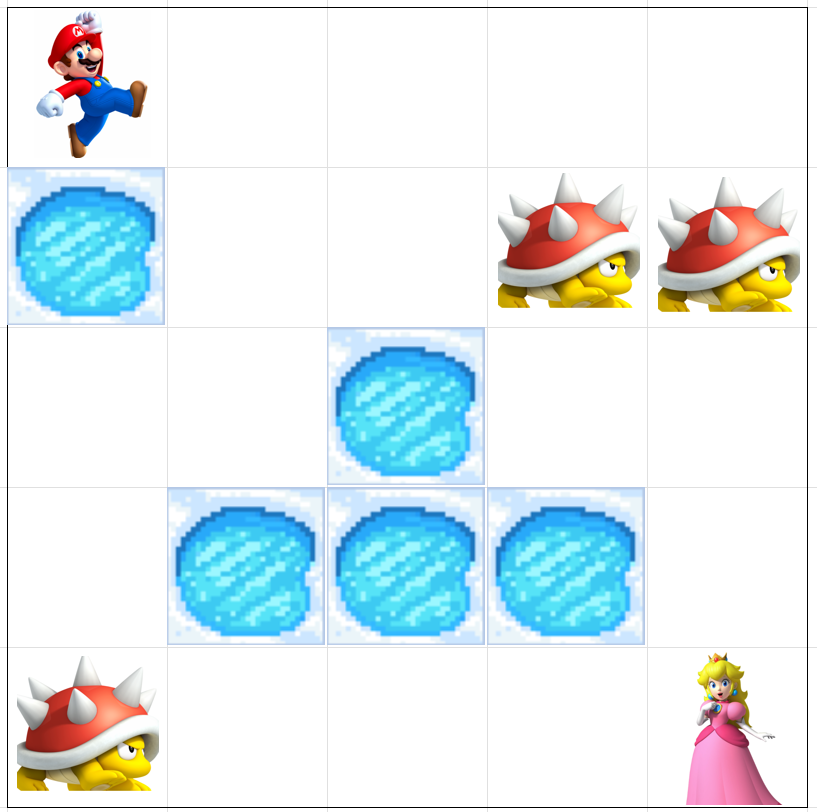

Take a look at our Frozen Lake Python example in the lecture note and adapt the code to design a new super mario game. The goal of the game is of course to reach to princess Peach at the bottom right, starting from the top left. Follow these steps to modify your environment definition (class FrozonLake, or whatever name you want to call it):

- The

grid_sizeshould be 5x5. Keep in mind that indices in python starts from 0. - There are five icy holes, located as shown in the following figure. If Mario steps into a lake, the reward is -0.5. But you will terminate the game, as what the frozen lake game did.

- There are three monster turtles, and reaching them will get an reward of -1, due to fighting them. You may want to add something like

self.turtlesto indicate their locations and then properly return the reward in thestep()function by checking your locations. - The reward for stepping into each empty floor is -0.1 due to spending energy to walk.

- There reward for reaching princess Peach is 10.

After defining this game environment, play the game (using the code provided in the lecture, with minor adjustment) with the following action sequence, and validate that your environment is defined properly.

- RIGHT, RIGHT, DOWN, RIGHT, DOWN, RIGHT, DOWN, DOWN

Question 2:Value Iteration¶

Use the same code of the value iteration provided in our lecture note, estimate the optimal policy. Also perform the following:

- Use a discount factor of 0.9.

- Output the estimated value function each time

iterationsincreases

Report the optimal policy and the estimated value function at each location. Does this match your expectation?

Question 3: Policy Iteration¶

Also run the policy iteration algorithm. But modify the algorithm such that

- Use a discount factor of 0.9.

- It outputs the estimated value function each time

iterincreases in the mainpolicy_iterationfunction

Is the result the final result the same as your value iteration algorithm? Are the intermediate results the same? Can you comment on how the two algorithms differs mechanically?